But regarding the pause on AI, the following video talks about the motivation behind the pause.

It turns out that the ideas about Moloch actually played a major role.

I've been thinking about what it would take to slay Moloch, and I believe the answer is that the resources required as input to meet human needs have to get increasingly smaller.

The metric would be: (Needs per person)/(Resources spent per person). You can use whatever units make sense. The lower this number, the better society is doing (and the worse Moloch is doing); the greater this number, the worse society is doing (and the more Moloch is winning).

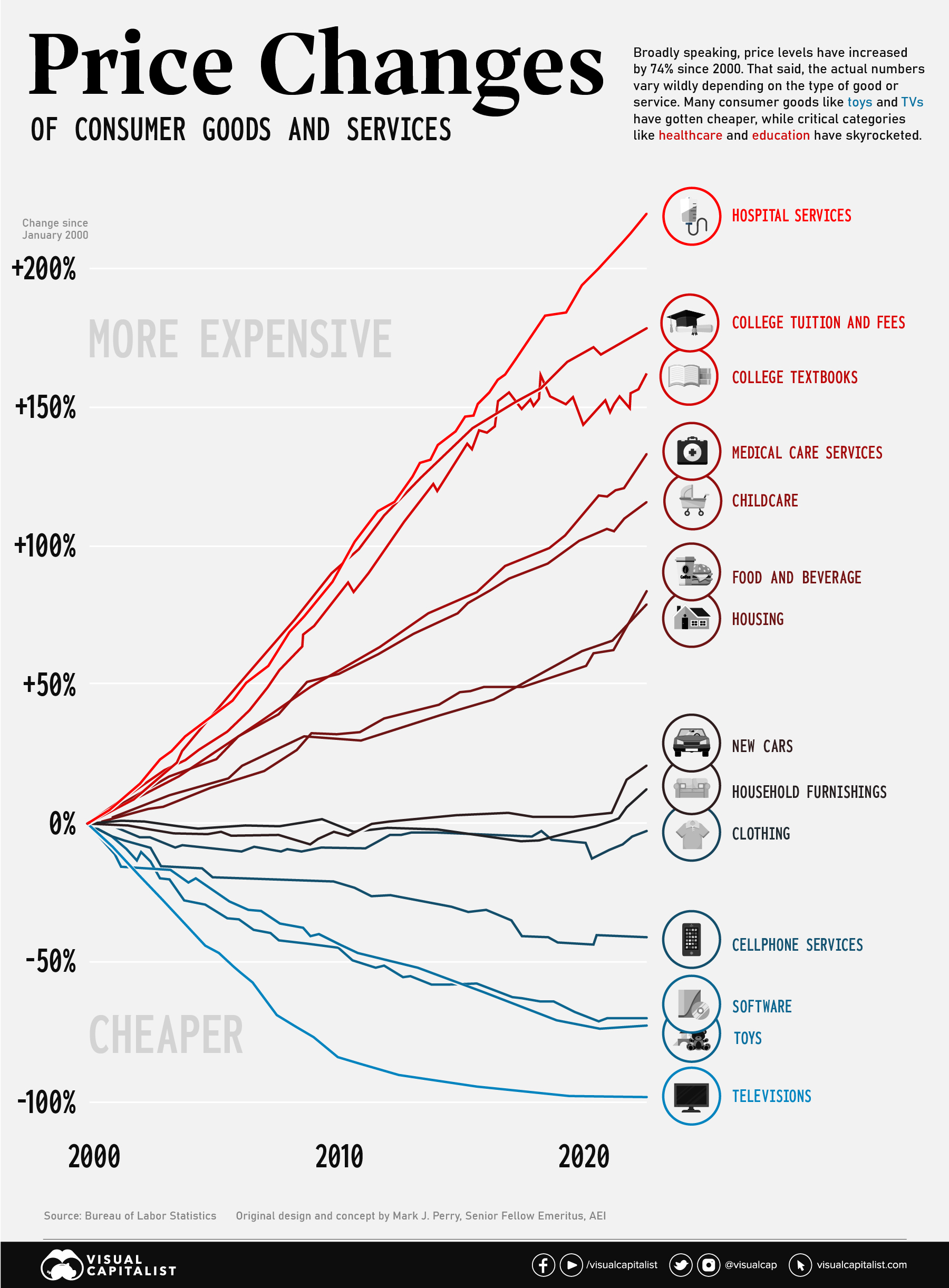

For instance if we do this in money (though it need not be):

(Money needed per person to meet needs)/(Per capita GDP)

The lower this is, the less of each person's income is needed just to meet needs. This includes housing, food, clothing, healthcare, education, safety needs, belonging and connecting.
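To make the ratio concrete, here is a minimal Python sketch of the money version. The function name `needs_burden` and the dollar figures are hypothetical, chosen only for illustration; they are not real statistics.

```python
def needs_burden(cost_of_needs_per_person: float, gdp_per_capita: float) -> float:
    """Return (money needed per person to meet needs) / (per capita GDP).

    Lower values mean less of each person's income goes to meeting needs.
    """
    if gdp_per_capita <= 0:
        raise ValueError("gdp_per_capita must be positive")
    return cost_of_needs_per_person / gdp_per_capita


# Hypothetical figures, for illustration only (not real data).
ratio = needs_burden(cost_of_needs_per_person=30_000, gdp_per_capita=60_000)
print(f"Needs burden: {ratio:.2f}")
```

Tracking this number over time would show whether meeting needs is getting cheaper relative to output (ratio falling) or more expensive (ratio rising).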

Can we really figure this out in 6 months?

I posted this elsewhere, but here is Moloch by the numbers. This is the beast that needs slaying. "AGI" is just a proxy:

Edit: I realize we would need to define a need vs. a want, as it can get nuanced. I think a good visual is a pie chart for CPI/PCE. There are things not in the pie that one can certainly say are not needs. We can disagree, but I think some thought on how to actually separate the structure of demand this way would go a long way, no matter what economic system we use.

There are some categories that are not needs:

1) Tobacco

2) Some things in "other" would probably count as well.

3) Things not in the basket defining CPI/PCE are probably not needs.

4) Many forms of recreation. Though psychological health would be a need.

There are some things that seem like needs, especially since substitute goods are placed in the basket as a matter of course.

1) Housing, though size and type of house needs to be considered. In many places, affordable housing is illegal to build.

2) Food and beverage. Again, what counts as needed depends on the choice between home cooking, dining out, and fine dining.

3) Medical care, elective care notwithstanding.

4) Education. Level, quality, scope, and type should be considered.

5) Transportation for work and getting other necessities.

6) Basic clothing (apparel). Though I think people probably over-consume this.

My main point is that economists should be tracking some number independent of CPI/PCE and GDP that can measure needs in aggregate. The CPI/PCE basket is already pretty complex, so I don't see why a needs basket of goods and services couldn't be defined by some economic organization. Maybe some forum members are aware of one.
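As a rough sketch of what a needs basket could look like in practice, the Python below tags each spending category as a need or a want and sums only the needs. The category names loosely follow the lists above, but the `Category` type, the need/want flags, and every dollar amount are hypothetical placeholders, not real CPI/PCE weights.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Category:
    name: str
    annual_spend: float  # per person; hypothetical figure, not real data
    is_need: bool        # the need/want call is the contested judgment


def needs_cost(basket: list[Category]) -> float:
    """Total per-person spending on categories flagged as needs."""
    return sum(c.annual_spend for c in basket if c.is_need)


def needs_share(basket: list[Category]) -> float:
    """Fraction of total basket spending that goes to needs."""
    total = sum(c.annual_spend for c in basket)
    return needs_cost(basket) / total


# Hypothetical basket, for illustration only.
basket = [
    Category("housing", 12_000.0, True),
    Category("food and beverage", 5_000.0, True),
    Category("medical care", 4_000.0, True),
    Category("tobacco", 500.0, False),
    Category("recreation", 2_000.0, False),
]

print(f"Needs cost per person: {needs_cost(basket):.0f}")
print(f"Needs share of basket: {needs_share(basket):.2%}")
```

The output of `needs_cost` is the numerator of the metric above; dividing it by per capita GDP gives the ratio. The hard part is not the arithmetic but agreeing on the `is_need` flags and on how much of each category (house size, type of dining) counts.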

I suppose the closest metric we have to the one I defined is the inverse of "real" GDP. But I don't believe that CPI or PCE really captures needs all that well. Frankly, I think using housing cost by itself, times some multiple, would be much closer to how people actually experience inflation. Or medical care times some multiple for older folks.
